The landscape of web development is undergoing a fundamental transformation as artificial intelligence becomes deeply integrated into how we interact with online content. Two significant initiatives are leading this evolution: Microsoft's NLWeb (Natural Language Web) project and Anthropic's Model Context Protocol (MCP). While both aim to revolutionize how AI systems interact with data and content, they approach the challenge from different angles and serve distinct purposes in the emerging AI-web ecosystem.

Understanding these technologies and their relationship is crucial for developers, content creators, and organizations looking to leverage AI for enhanced web experiences. This article examines both initiatives, their technical foundations, practical applications, and how they complement or compete with each other in shaping the future of web interaction.

Understanding NLWeb: Microsoft's Vision for Conversational Websites

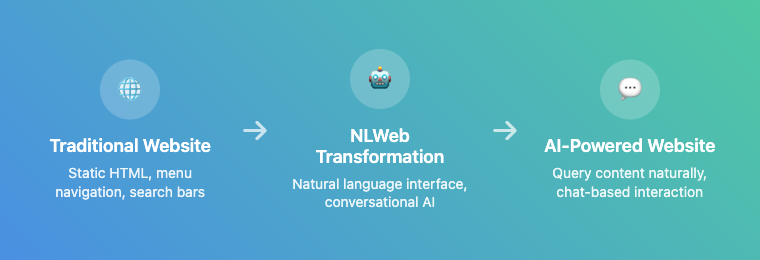

NLWeb is Microsoft's attempt to turn traditional websites into AI-powered conversational interfaces. Conceived and developed by R.V. Guha, who helped create widely adopted web standards like RSS, RDF, and Schema.org, NLWeb addresses a fundamental shift in how users expect to interact with web content in the age of large language models.

The core premise of NLWeb is elegantly simple yet revolutionary: instead of forcing users to navigate through menus, search bars, and hierarchical page structures, websites should allow visitors to query content using natural language, much like they would interact with ChatGPT or Microsoft Copilot. This paradigm shift acknowledges that the traditional web navigation model, optimized for human browsing patterns, may not be the most efficient way to interact with content when AI can serve as an intelligent intermediary.

Technical Architecture and Implementation

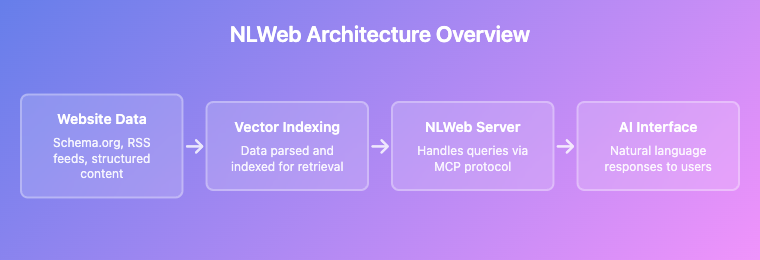

NLWeb's technical foundation rests on structured data formats that many websites already publish. The system parses and ingests data from Schema.org markup and RSS feeds, which provide rich, machine-readable descriptions of web content. Because it builds on established web standards, it does not require new markup languages or content formats.
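To make the ingestion step concrete, here is a minimal sketch of pulling Schema.org data out of a page. It assumes the markup is embedded as JSON-LD in `<script type="application/ld+json">` tags, which is one common way sites publish Schema.org data. The class and function names and the sample page are illustrative and are not taken from NLWeb's own code.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the contents of <script type="application/ld+json"> tags."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.items = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                parsed = json.loads("".join(self._buffer))
            except json.JSONDecodeError:
                return  # skip malformed blocks rather than failing the whole page
            # A block may hold a single object or a list of objects.
            self.items.extend(parsed if isinstance(parsed, list) else [parsed])


def extract_schema_org(html: str) -> list:
    """Return every JSON-LD object found in the given HTML string."""
    parser = JsonLdExtractor()
    parser.feed(html)
    return parser.items


# Hypothetical sample page used only for illustration.
SAMPLE_PAGE = """
<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Product",
 "name": "Trail Boot", "description": "Waterproof hiking boot"}
</script>
</head><body></body></html>
"""

items = extract_schema_org(SAMPLE_PAGE)
print(items[0]["@type"], "-", items[0]["name"])
```

A real ingestion pipeline would also need to handle RSS feeds and other Schema.org encodings such as microdata, which this sketch leaves out.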

Once the structured data is extracted, NLWeb indexes it using vector retrieval services, enabling sophisticated semantic search capabilities. When users submit natural language queries, a lightweight server processes these requests, performing natural language understanding and retrieval operations to provide contextually relevant responses. This architecture ensures that responses are grounded in the actual content of the website rather than generating potentially inaccurate information.
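The indexing and retrieval flow described above can be illustrated with a toy example. This sketch swaps the real embedding model and vector database for a bag-of-words vector and an in-memory list, purely so it runs with the standard library. `TinyIndex`, `embed`, and the sample items are hypothetical names, not part of NLWeb.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. A stand-in for a real model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class TinyIndex:
    """In-memory stand-in for a vector retrieval service."""

    def __init__(self):
        self._rows = []

    def add(self, item: dict) -> None:
        text = f"{item.get('name', '')} {item.get('description', '')}"
        self._rows.append((embed(text), item))

    def search(self, query: str, k: int = 3) -> list:
        q = embed(query)
        scored = [(cosine(q, vec), item) for vec, item in self._rows]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        # Only return items that share something with the query, so answers
        # stay grounded in indexed content.
        return [(score, item) for score, item in scored[:k] if score > 0]


index = TinyIndex()
index.add({"name": "Trail Boot", "description": "Waterproof hiking boot"})
index.add({"name": "City Sneaker", "description": "Lightweight everyday shoe"})

for score, item in index.search("waterproof hiking boot"):
    print(round(score, 2), item["name"])
```

Word overlap is a crude proxy for semantic similarity. A production deployment would use learned embeddings so that, for example, "rainproof" can match "waterproof".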

Key Technical Components

NLWeb's implementation relies on three critical components: data ingestion from existing structured formats, vector-based indexing for semantic search, and a query processing server that handles natural language understanding. This architecture allows for rapid deployment on existing websites without requiring extensive content restructuring.

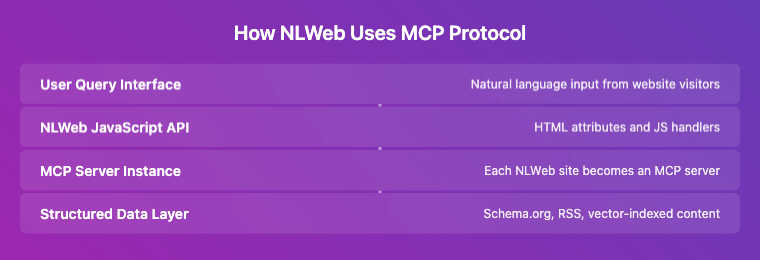

What makes NLWeb particularly accessible to developers is its integration approach. Website owners can implement conversational interfaces using simple HTML attributes and JavaScript APIs, making the technology approachable for front-end developers without requiring deep AI expertise. This democratization of AI-powered web experiences aligns with Microsoft's broader strategy of making advanced AI capabilities accessible to mainstream developers.

The MCP Connection

Perhaps the most intriguing aspect of NLWeb is its integration with the Model Context Protocol. Every NLWeb instance functions as an MCP server, supporting the core "ask" method that allows external AI systems to query website content using natural language. This design decision transforms individual websites into composable endpoints that can be discovered and utilized by AI agents and applications.
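As a rough illustration of what querying such an endpoint might look like, the sketch below builds a JSON-RPC 2.0 message, which is the message format MCP uses. It assumes that `ask` is exposed as an MCP tool invoked through `tools/call` and that it takes a `query` argument. Both are assumptions made for illustration, so check the NLWeb documentation for the actual parameter names.

```python
import json
from itertools import count

# Monotonically increasing JSON-RPC request ids.
_request_ids = count(1)


def build_ask_request(question: str) -> dict:
    """Build a JSON-RPC 2.0 request that calls a tool named 'ask'."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {
            "name": "ask",
            # The argument name 'query' is an assumption, not confirmed by the source.
            "arguments": {"query": question},
        },
    }


request = build_ask_request("Which articles cover vector databases?")
print(json.dumps(request, indent=2))
```

The message would then be sent over whichever transport the server supports. The transport is omitted here to keep the example self-contained.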

This integration represents a strategic architectural choice that extends NLWeb's utility beyond direct user interaction. By implementing the MCP standard, NLWeb-enabled websites become part of a larger ecosystem where AI applications can automatically discover and query relevant content sources, enabling more sophisticated multi-source information retrieval and cross-website intelligence.

The Model Context Protocol: A Universal Standard for AI Integration

While NLWeb focuses specifically on web content interaction, the Model Context Protocol represents a much broader initiative to standardize how AI applications connect with external data sources and tools. Developed by Anthropic and gaining significant industry support, including adoption by OpenAI, MCP functions as what many describe as "USB-C for AI applications": a universal connector standard for AI systems.

The protocol addresses a fundamental challenge in AI application development: the need for LLMs to access external context and tools while maintaining security, reliability, and interoperability. Before MCP, each AI application had to develop custom integrations with various data sources, leading to fragmented, incompatible solutions that were difficult to maintain and scale.

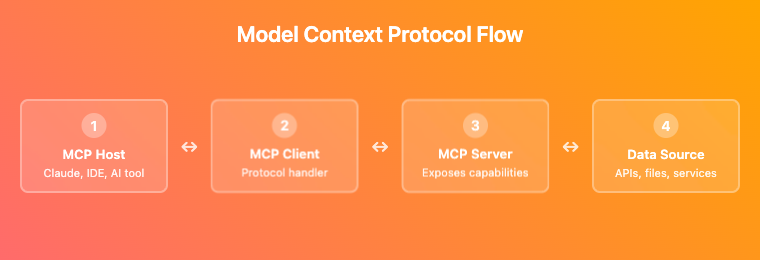

Client-Server Architecture

MCP employs a straightforward client-server architecture where host applications (such as Claude Desktop, IDEs, or other AI tools) connect to multiple specialized servers. Each MCP server exposes specific capabilities through the standardized protocol, whether that's accessing local files, querying databases, or interfacing with remote APIs.

This architecture provides several key advantages. MCP hosts can connect to multiple servers simultaneously, enabling AI applications to access diverse data sources through a single, consistent interface. Client and server negotiate capabilities when a connection is initialized, and the connection is stateful, so each side knows which features the other supports. The specification also sets out security and user-consent requirements, addressing critical concerns about data access and privacy.
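The one-host, many-servers shape can be sketched in a few lines. This is an in-process toy, not an MCP implementation: real hosts talk to servers over a transport using JSON-RPC, and `ToyHost`, `ToyServer`, and the tool names are hypothetical.

```python
from typing import Callable, Dict


class ToyServer:
    """Stand-in for one MCP server: a name plus a table of callable tools."""

    def __init__(self, name: str, tools: Dict[str, Callable[..., str]]):
        self.name = name
        self.tools = tools


class ToyHost:
    """Stand-in for an MCP host that keeps one connection per server."""

    def __init__(self):
        self._servers: Dict[str, ToyServer] = {}

    def connect(self, server: ToyServer) -> None:
        self._servers[server.name] = server

    def list_tools(self) -> list:
        # Prefix each tool with its server name so identical tool names
        # on different servers do not collide.
        return sorted(
            f"{s.name}.{t}" for s in self._servers.values() for t in s.tools
        )

    def call(self, qualified_name: str, **arguments) -> str:
        server_name, _, tool_name = qualified_name.partition(".")
        server = self._servers.get(server_name)
        if server is None or tool_name not in server.tools:
            raise KeyError(f"unknown tool: {qualified_name}")
        return server.tools[tool_name](**arguments)


host = ToyHost()
host.connect(ToyServer("files", {"read": lambda path: f"contents of {path}"}))
host.connect(ToyServer("db", {"query": lambda sql: f"rows for: {sql}"}))

print(host.list_tools())
print(host.call("files.read", path="notes.txt"))
```

The point of the sketch is the routing: the application sees one flat list of tools, while each call is dispatched to whichever server owns it.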

Protocol Capabilities

MCP servers expose three main kinds of capability: prompts (reusable templates for specific tasks), resources (local or remote data the application can read), and tools (functions the model can ask the server to execute). The protocol's extensible design allows for custom capabilities while maintaining interoperability standards.
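On the server side, exposing a tool comes down to answering a small set of JSON-RPC methods. The sketch below handles only `tools/list` and `tools/call` for a single hypothetical `echo` tool, with response shapes simplified from the MCP specification. It skips initialization, transports, prompts, and resources, so treat it as a reading aid rather than a working server.

```python
# Hypothetical tool table; 'echo' exists only for this illustration.
TOOLS = {
    "echo": {
        "description": "Return the input text unchanged",
        "inputSchema": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
        "run": lambda arguments: arguments["text"],
    }
}


def _error(request_id, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}


def handle_request(request: dict) -> dict:
    """Dispatch one JSON-RPC request to the matching handler."""
    request_id = request.get("id")
    method = request.get("method")
    if method == "tools/list":
        listing = [
            {"name": name, "description": tool["description"],
             "inputSchema": tool["inputSchema"]}
            for name, tool in TOOLS.items()
        ]
        result = {"tools": listing}
    elif method == "tools/call":
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            # -32602 is the JSON-RPC "invalid params" code.
            return _error(request_id, -32602, f"Unknown tool: {params.get('name')}")
        text = tool["run"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        # -32601 is the JSON-RPC "method not found" code.
        return _error(request_id, -32601, f"Method not found: {method}")
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


print(handle_request({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}))
print(handle_request({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                      "params": {"name": "echo", "arguments": {"text": "hi"}}}))
```

In practice most developers would use an official MCP SDK rather than hand-writing the dispatch, but seeing the raw messages helps clarify what the SDK is doing.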

Industry Adoption and Ecosystem

The significance of MCP extends beyond its technical merits to its growing industry adoption. OpenAI's decision to integrate MCP across its product line, including the ChatGPT desktop application and OpenAI Agents SDK, demonstrates the protocol's practical value and industry acceptance. This cross-vendor adoption is particularly notable in an industry where proprietary standards often dominate.

The protocol's open-source nature and comprehensive specification have fostered a growing ecosystem of MCP servers for various use cases. Developers can find pre-built integrations for common data sources and tools, while the standardized approach makes it feasible to switch between different AI providers without rebuilding integrations from scratch.

Comparing NLWeb and MCP: Complementary Technologies

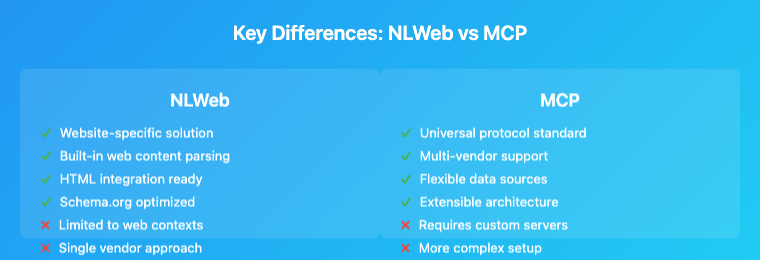

Rather than viewing NLWeb and MCP as competing technologies, it's more accurate to understand them as complementary solutions addressing different aspects of AI-web integration. NLWeb provides a specialized, turnkey solution for transforming websites into conversational interfaces, while MCP offers a universal protocol for AI applications to connect with diverse data sources.

The relationship between these technologies becomes clearer when examining their respective strengths and limitations. NLWeb excels in scenarios where website owners want to quickly implement conversational AI capabilities without extensive technical development. Its built-in understanding of web content formats, automatic parsing of structured data, and simple integration approach make it an attractive option for content-driven websites looking to enhance user experience.

Scope and Application Differences

The fundamental difference lies in scope and intended application. NLWeb is specifically designed for web content scenarios, with deep integration for HTML, Schema.org markup, and RSS feeds. This specialization enables sophisticated out-of-the-box functionality for websites but limits its applicability to web-based use cases.

MCP, conversely, provides a general-purpose protocol that can handle any type of data source or tool integration. While this flexibility requires more initial setup and custom server development, it enables AI applications to access databases, APIs, local files, and other non-web resources through a consistent interface.

Integration Complexity

NLWeb prioritizes ease of implementation for web developers, offering HTML attributes and JavaScript APIs that can be quickly added to existing sites. MCP requires developers to create or deploy server implementations but provides much greater flexibility in what data sources and capabilities can be exposed to AI applications.

Technical Implementation Comparison

| Aspect | NLWeb | Model Context Protocol |
|---|---|---|
| Primary Use Case | Conversational website interfaces | Universal AI-data integration |
| Data Sources | Web content, Schema.org, RSS | Any data source or tool |
| Implementation Complexity | Low - HTML attributes and JavaScript | Medium - Custom server development |
| Vendor Support | Microsoft-led initiative | Multi-vendor (Anthropic, OpenAI) |
| Extensibility | Limited to web content paradigms | Highly extensible protocol |
| Setup Time | Minutes to hours | Hours to days |

When to Choose Each Technology

The choice between NLWeb and MCP depends largely on specific use cases and technical requirements. NLWeb is a good fit for website owners who want to add conversational AI capabilities with minimal technical overhead. News sites, documentation portals, e-commerce platforms, and other content-heavy websites are the most natural candidates for NLWeb's specialized approach to web content interaction.

MCP becomes the preferred choice when building AI applications that need to access diverse data sources beyond web content. Enterprise applications integrating with databases, customer relationship management systems, file storage, and custom APIs will find MCP's flexibility and extensibility essential for comprehensive AI integration.

Practical Implementation Scenarios

NLWeb in Action: E-commerce Integration

Consider an e-commerce website implementing NLWeb to enhance product discovery. Traditional product navigation requires users to browse categories, apply filters, and compare specifications manually. With NLWeb integration, customers can ask questions like "Show me waterproof hiking boots under $200 with good reviews" and receive contextually relevant product recommendations based on the site's actual inventory and structured product data.

The implementation process involves adding Schema.org product markup (which many e-commerce sites already have for SEO purposes), implementing the NLWeb JavaScript API, and configuring the natural language query interface. The result is a more intuitive shopping experience, which may in turn improve conversion rates and customer satisfaction.
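To see why structured product data matters for a query like the hiking-boots example, consider what happens once the question has been turned into constraints. The sketch below assumes a language model has already translated the question into keywords, a price ceiling, and a rating floor (with 4.0 standing in for "good reviews"), and then applies them to Schema.org-style `Product` records. The catalog and the thresholds are invented for illustration.

```python
# Hypothetical catalog entries shaped like Schema.org Product JSON-LD.
PRODUCTS = [
    {"@type": "Product", "name": "Ridge Waterproof Hiking Boot",
     "description": "Waterproof leather hiking boot",
     "offers": {"price": "179.00", "priceCurrency": "USD"},
     "aggregateRating": {"ratingValue": "4.6"}},
    {"@type": "Product", "name": "Summit Waterproof Hiking Boot",
     "description": "Waterproof insulated hiking boot",
     "offers": {"price": "249.00", "priceCurrency": "USD"},
     "aggregateRating": {"ratingValue": "4.8"}},
    {"@type": "Product", "name": "Canyon Hiking Boot",
     "description": "Breathable mesh hiking boot",
     "offers": {"price": "129.00", "priceCurrency": "USD"},
     "aggregateRating": {"ratingValue": "4.4"}},
    {"@type": "Product", "name": "Creek Waterproof Hiking Boot",
     "description": "Waterproof budget hiking boot",
     "offers": {"price": "149.00", "priceCurrency": "USD"},
     "aggregateRating": {"ratingValue": "3.2"}},
]


def matches(product: dict, keywords: list, max_price: float, min_rating: float) -> bool:
    """Check one product against constraints extracted from the user's question."""
    text = f"{product['name']} {product['description']}".lower()
    price = float(product["offers"]["price"])
    rating = float(product["aggregateRating"]["ratingValue"])
    return (
        all(word in text for word in keywords)
        and price < max_price
        and rating >= min_rating
    )


# Constraints a language model might extract from:
# "Show me waterproof hiking boots under $200 with good reviews"
constraints = {"keywords": ["waterproof", "hiking", "boot"],
               "max_price": 200.0, "min_rating": 4.0}

hits = [p["name"] for p in PRODUCTS if matches(p, **constraints)]
print(hits)
```

Because price and rating come from structured fields rather than free text, the filter is exact, which is what keeps the recommendations tied to the site's actual inventory.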

MCP Implementation: Enterprise Data Integration

An enterprise AI application using MCP might need to access customer data from a CRM system, financial information from an ERP platform, and document repositories simultaneously. By implementing MCP servers for each data source, the AI application can query across all systems using natural language while maintaining proper security boundaries and access controls.

This implementation requires developing custom MCP servers for each data source, but the standardized protocol ensures that once developed, these servers can be used by any MCP-compatible AI application. The long-term benefit is a reusable, standardized approach to AI-data integration across the organization.

Security Considerations

Both NLWeb and MCP implementations must carefully address security concerns. NLWeb needs to ensure that conversational interfaces don't expose sensitive information inadvertently, while MCP implementations require robust authentication, authorization, and data access controls to prevent unauthorized access to integrated systems.
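One way to picture the authorization requirement is a gate that sits in front of every tool call. The sketch below uses a hard-coded role table and made-up tool names. A real deployment would draw roles and permissions from an identity provider and log every decision.

```python
# Hypothetical role-to-tool permissions for an enterprise deployment.
ROLE_PERMISSIONS = {
    "support_agent": {"crm.lookup_customer"},
    "finance_analyst": {"crm.lookup_customer", "erp.get_invoice"},
}


class PermissionDenied(Exception):
    """Raised when a role tries to call a tool it has not been granted."""


def authorize(role: str, tool: str) -> None:
    # Unknown roles get an empty permission set, so the default is to deny.
    if tool not in ROLE_PERMISSIONS.get(role, set()):
        raise PermissionDenied(f"role '{role}' may not call '{tool}'")


def guarded_call(role: str, tool: str, handler, **arguments):
    """Run the handler only after the authorization check passes."""
    authorize(role, tool)
    return handler(**arguments)


def get_invoice(invoice_id: str) -> str:
    return f"invoice {invoice_id}"


print(guarded_call("finance_analyst", "erp.get_invoice", get_invoice, invoice_id="42"))

try:
    guarded_call("support_agent", "erp.get_invoice", get_invoice, invoice_id="42")
except PermissionDenied as exc:
    print("denied:", exc)
```

The design choice worth noting is deny-by-default: anything not explicitly granted is refused, including roles the table has never heard of.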

Hybrid Approaches

The most sophisticated implementations may combine both technologies. A media organization might use NLWeb to provide conversational access to their published content while simultaneously using MCP to integrate with internal content management systems, subscriber databases, and analytics platforms. This hybrid approach leverages the strengths of both technologies to create comprehensive AI-powered experiences.

Future Implications and Industry Impact

The emergence of NLWeb and MCP signals a broader transformation in how we conceptualize web interaction and AI integration. These technologies represent the infrastructure layer for what many describe as the "AI Web": an internet where natural language becomes the primary interface for information discovery and task completion.

Democratization of AI-Powered Experiences

NLWeb's low-barrier approach to implementing conversational interfaces could democratize AI-powered web experiences in ways similar to how content management systems democratized web publishing. Small businesses, individual creators, and organizations without extensive technical resources can now offer sophisticated AI interactions that were previously available only to large technology companies.

This democratization has significant implications for web accessibility and user experience. Conversational interfaces can provide more intuitive access to information for users with disabilities, non-technical users, and those who prefer natural language interaction over traditional navigation paradigms.

Standardization and Interoperability

MCP's role as a universal standard for AI-data integration could prevent the fragmentation that has historically plagued software integration efforts. By providing a common protocol that major AI providers support, MCP reduces the technical burden of maintaining multiple custom integrations while improving the reliability and security of AI applications.

The protocol's open-source nature and comprehensive specification foster an ecosystem where developers can build and share reusable integrations, accelerating the development of AI-powered applications across industries.

Economic Implications

The widespread adoption of these technologies could reshape the economics of web development and AI application creation. Reduced development costs and faster implementation times may lower barriers to entry for AI-powered applications while creating new opportunities for specialized service providers and integration specialists.

Challenges and Considerations

Despite their promise, both technologies face significant challenges. NLWeb must address concerns about information accuracy, as conversational interfaces may generate responses that seem authoritative but contain errors. Ensuring that AI-generated responses remain grounded in actual website content rather than hallucinated information requires careful implementation and ongoing monitoring.

MCP faces the challenge of achieving widespread adoption across diverse AI platforms and data sources. While early support from major providers is encouraging, the protocol's success depends on continued industry engagement and the development of comprehensive server implementations for common use cases.

Privacy and Data Governance

Both technologies raise important questions about data privacy and governance. NLWeb implementations must ensure that conversational interfaces respect user privacy and don't inadvertently expose sensitive information through natural language queries. MCP implementations require robust data governance frameworks to manage access controls and ensure compliance with privacy regulations across integrated systems.

These challenges highlight the importance of implementing proper security measures, user consent mechanisms, and data access controls from the outset rather than treating them as afterthoughts.

Looking Forward: The Evolution of AI-Web Integration

The relationship between NLWeb and MCP reflects broader trends in AI application development, where specialized solutions and universal standards coexist to address different aspects of the same fundamental challenge. As these technologies mature, we can expect to see increased integration between them and the emergence of new tools that bridge the gap between web-specific and general-purpose AI integration approaches.

Emerging Patterns and Best Practices

Early implementations of both technologies are revealing best practices and common patterns that will likely influence future development. Successful NLWeb deployments emphasize the importance of high-quality structured data, clear user expectations about conversational capabilities, and fallback mechanisms for queries that exceed the system's capabilities.
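A fallback mechanism can be as simple as refusing to answer when retrieval confidence is low. The sketch below assumes retrieval returns `(score, item)` pairs with scores between 0 and 1. The 0.3 threshold, the message text, and the URLs are arbitrary placeholders.

```python
FALLBACK_MESSAGE = (
    "I couldn't find that on this site. Try the search page or contact support."
)


def answer_or_fallback(scored_results: list, min_score: float = 0.3) -> dict:
    """Answer only from sufficiently relevant items; otherwise fall back."""
    confident = [(score, item) for score, item in scored_results if score >= min_score]
    if not confident:
        return {"grounded": False, "message": FALLBACK_MESSAGE, "sources": []}
    titles = ", ".join(item["name"] for _, item in confident)
    return {
        "grounded": True,
        "message": f"Based on this site's content: {titles}",
        # Returning source URLs lets users verify the answer themselves.
        "sources": [item["url"] for _, item in confident],
    }


# Hypothetical retrieval output for two different queries.
good = [(0.82, {"name": "Trail Boot", "url": "https://example.com/trail-boot"}),
        (0.12, {"name": "City Sneaker", "url": "https://example.com/city-sneaker"})]
weak = [(0.08, {"name": "City Sneaker", "url": "https://example.com/city-sneaker"})]

print(answer_or_fallback(good))
print(answer_or_fallback(weak))
```

Tuning the threshold is a trade-off: set it too high and the interface refuses answerable questions, too low and it returns loosely related content as if it were an answer.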

MCP implementations are demonstrating the value of modular server architectures, comprehensive error handling, and careful security design. These patterns will likely evolve into standardized frameworks and toolkits that simplify implementation for future adopters.

The convergence of these technologies with other emerging standards like WebAssembly, progressive web applications, and edge computing platforms suggests a future where AI-powered web experiences become increasingly sophisticated and performant while remaining accessible to developers and users alike.

Community and Ecosystem Development

Both NLWeb and MCP benefit from active community involvement and open-source development approaches. Microsoft's decision to publish NLWeb as an open project and Anthropic's open-source MCP specification encourage community contributions and diverse implementations that extend beyond the original creators' vision.

This community-driven approach is essential for addressing the diverse needs of different industries, use cases, and technical environments. The success of both technologies will depend on fostering vibrant ecosystems of developers, contributors, and users who can drive innovation and ensure broad applicability.

Call to Action

For developers and organizations considering these technologies, the key is to start experimenting with pilot implementations that align with specific use cases and technical capabilities. Both NLWeb and MCP offer low-risk entry points for exploring AI-web integration while building expertise and understanding for more comprehensive implementations.

Conclusion: Complementary Forces Shaping the AI Web

NLWeb and the Model Context Protocol represent complementary approaches to the fundamental challenge of integrating artificial intelligence with web content and data sources. Rather than competing technologies, they address different aspects of the same evolutionary trend toward more intelligent, conversational web experiences.

NLWeb provides a specialized, accessible solution for website owners who want to implement conversational AI capabilities quickly and effectively. Its focus on web content, integration with existing standards like Schema.org, and low-barrier implementation make it an attractive option for content-driven websites seeking to enhance user experience through natural language interaction.

The Model Context Protocol offers a universal standard for AI applications to connect with diverse data sources and tools. Its flexibility, multi-vendor support, and extensible architecture make it essential for sophisticated AI applications that need to access multiple systems and data types reliably and securely.

Together, these technologies are laying the foundation for what many describe as the AI Web: an internet where natural language becomes the primary interface for information discovery, task completion, and content interaction. The success of this transformation depends not only on the technical merits of these solutions but also on their ability to address real user needs while maintaining security, privacy, and reliability standards.

For developers, content creators, and organizations looking to leverage AI for enhanced web experiences, understanding both technologies and their appropriate applications is crucial. The choice between NLWeb, MCP, or hybrid approaches should be based on specific use cases, technical requirements, and long-term strategic goals rather than viewing them as mutually exclusive options.

As these technologies continue to evolve and mature, they promise to reshape how we interact with web content and build AI-powered applications. The future of web development increasingly involves not just creating static content or interactive interfaces, but designing intelligent, conversational experiences that can understand and respond to user needs in natural, intuitive ways.

The journey toward this AI-powered web is just beginning, and both NLWeb and MCP represent important milestones in that evolution. By understanding their capabilities, limitations, and appropriate applications, we can make informed decisions about how to leverage these powerful technologies to create better, more accessible, and more intelligent web experiences for everyone.